Okay, so last week I was messing around with this ancient computer I found in my garage. Seriously, it looked like a dusty brick. I booted it up, and man, this old game loaded slower than molasses. Got me thinking: why do some old systems feel like they’re dragging their feet while newer stuff flies? That’s when I remembered all the talk about 16-bit and 32-bit bits and bobs. I mean, I ain’t no engineer, but I figured I’d grab some gear and test it myself so I could explain it in plain talk. Too many folks throw around fancy words, and it all sounds like gibberish. So I rolled up my sleeves and jumped in headfirst.

First off, I needed a simple way to see the difference without going nuts. I dug two devices out of my junk pile: an old 16-bit calculator thingy that could barely run anything, and a basic 32-bit tablet I got for free ages ago. Both were bare bones, with no extra apps or clutter. I wrote a little program, just a loop that added numbers over and over, like counting sheep when you can’t sleep. Kept it stupid simple so I wouldn’t get lost. I wrote it in a free app on my laptop, all offline, just local tinkering.
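For anyone who wants to try something similar on a laptop, here’s a rough Python sketch of that kind of adding loop. It’s an assumption, not the actual program from this experiment (the post doesn’t say what language that was): the function name `add_loop` and the count of 100,000 are made up for illustration, and it times itself with `time.perf_counter` instead of a phone stopwatch.

```python
import time

def add_loop(count):
    """Hypothetical helper: add 1, 2, 3, ... up to count, one number at a time."""
    total = 0
    for n in range(1, count + 1):
        total += n  # one small addition per pass, like counting sheep
    return total

# Time the loop with a high-resolution clock instead of a stopwatch.
start = time.perf_counter()
result = add_loop(100_000)
elapsed = time.perf_counter() - start
print(f"total = {result}, took {elapsed:.4f} seconds")
```

The timing number will differ on every machine, which is kind of the whole point of the experiment.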

The Messy Setup

I started by plugging in the devices and making sure they were clean. That calculator was finicky; half the time it wouldn’t even turn on without a whack. Once I got it running, I loaded my adding program onto both. Felt like dealing with stubborn kids. On the calculator, which is pure 16-bit, I hit run and just watched. Holy cow, it chugged through the numbers like a tired old donkey. It took forever to finish, as in minutes of waiting, and the screen flashed like it was struggling to keep up. I timed it with my phone’s stopwatch: no fancy tools here, just click and stare.

- Step one: Ran the same program on each device, one by one.

- Step two: Kept track of how long each one took. That calculator crawled.

- Step three: Noticed the 16-bit thing got hot and noisy after just a few rounds.

Then I switched to the 32-bit tablet, loaded the same adding loop, and set it loose. Boom! It blasted through those numbers in seconds flat, smooth as butter, with no extra heat or fuss. I measured the time again, and it was night and day: way faster. But I wanted to see whether it was just speed or something deeper, so I made the loop bigger, adding way more numbers this time. The 16-bit calculator? Yeah, it gave up the ghost pretty quick. It froze up, the screen went blank, and I had to restart it. Total pain. The 32-bit one? Handled it like a champ, no hiccups.
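Making the loop bigger also makes the running total bigger, and that’s one place bit width shows up in plain numbers. The hypothetical `first_overflow` helper below (just an illustration, assuming the total is kept as an unsigned whole number) finds the first loop length whose sum no longer fits in 16 or 32 bits. Nothing here proves that’s what actually froze the calculator; it only shows how much sooner a 16-bit number runs out of room.

```python
def first_overflow(bits):
    """Hypothetical helper: smallest n for which 1 + 2 + ... + n no longer
    fits in an unsigned integer that is `bits` bits wide."""
    limit = (1 << bits) - 1  # largest value that fits, e.g. 65,535 for 16 bits
    total, n = 0, 0
    while total <= limit:
        n += 1
        total += n  # same running total as the adding loop
    return n

for width in (16, 32):
    print(f"{width}-bit total runs out of room at n = {first_overflow(width)}")
```

With 16 bits the total spills over at n = 362; with 32 bits it holds out until n = 92,682.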

What I Figured Out

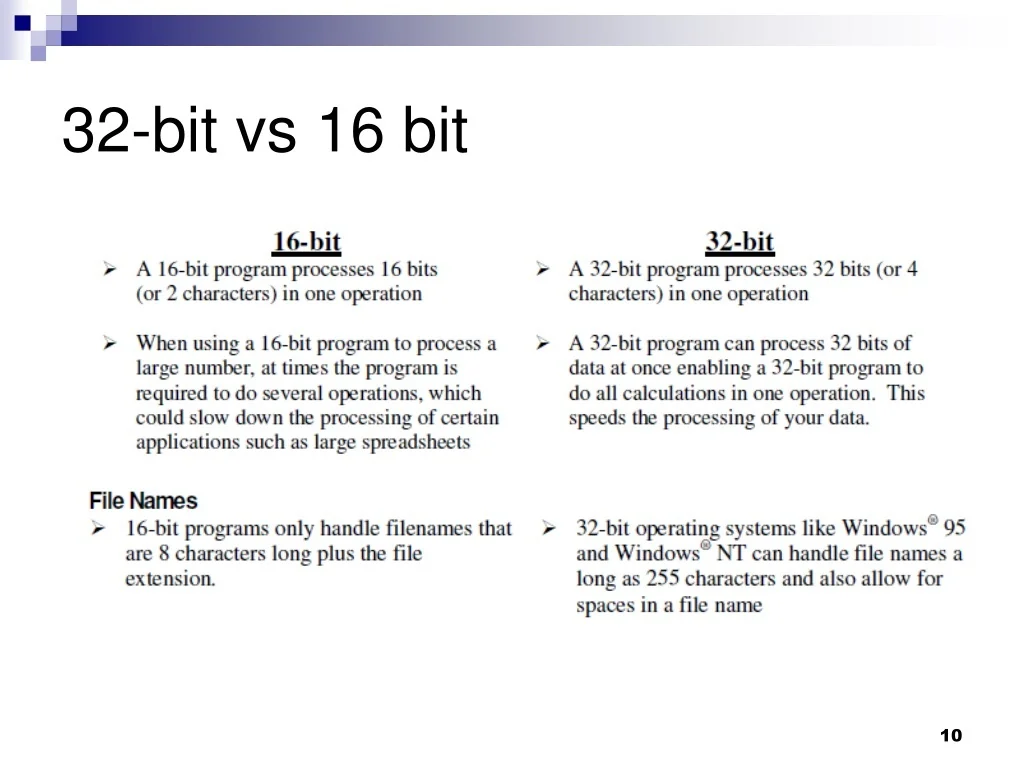

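So after all that, it hit me: this bit stuff mostly comes down to how big a chunk of data the processor can handle in one go. The 16-bit one has a tiny toolbox: it works on 16 bits at a time, so the biggest plain number it can grab in one step is 65,535, and anything larger has to be split up and handled in pieces. That’s why it drags and chokes on bigger loads. The 32-bit one has a bigger toolbox: 32 bits at a time, which covers numbers past four billion, so it whizzes through more work without breaking down. It’s like comparing a kid’s wagon to a pickup truck: both move, but one carries way more, and faster. (Granted, the tablet is also a newer machine that probably has a quicker chip, so bits aren’t the whole story.) No rocket science needed.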
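Here’s a rough Python illustration of that “handled in pieces” idea, assuming unsigned numbers under 2³². The function names are made up for this sketch, and real 16-bit chips do this in machine code (typically with an add-with-carry instruction), not Python. It just shows that a 16-bit processor needs two additions plus a carry for a number a 32-bit processor adds in one step.

```python
def add_32bit_on_16bit_cpu(a, b):
    """Hypothetical: add two unsigned 32-bit numbers using only 16-bit pieces,
    roughly how a 16-bit processor has to do it. Result wraps at 32 bits."""
    low = (a & 0xFFFF) + (b & 0xFFFF)      # step 1: add the low 16 bits
    carry = low >> 16                      # 1 if the low half spilled over
    high = (a >> 16) + (b >> 16) + carry   # step 2: add the high 16 bits plus carry
    return ((high & 0xFFFF) << 16) | (low & 0xFFFF)

def add_32bit_on_32bit_cpu(a, b):
    """Hypothetical: a 32-bit processor does the same job in a single step."""
    return (a + b) & 0xFFFFFFFF

a, b = 70_000, 100_000  # both too big for 16 bits (max 65,535)
print(add_32bit_on_16bit_cpu(a, b), add_32bit_on_32bit_cpu(a, b))
```

Both lines print 170000. Same answer, but the 16-bit version takes twice the work to get there, and a loop full of big numbers pays that cost over and over.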

Wrapping it up, I learned that with old gear you gotta keep it simple or suffer the lag. Makes sense why that garage find felt so creaky. Honestly, I’m chucking that calculator in the bin now. Good riddance! But it was a fun little brain scratch. Next time I buy tech, I’ll peek under the hood first.